ATIAM Internship Summer 2022

Lab: Sony CSL

Supervisor: Gaëtan Hadjeres

Report

Abstract

Many musicians use audio effects to shape their sound, to the point that these effects become part of their sonic identity. However, configuring audio effects often requires expert knowledge to find the settings that produce the desired sound. In this report, we present a novel method to automatically recognize the effect present in a reference sound and find parameters that reproduce its timbre. This tool aims to help artists during their creative process by quickly configuring effects to reproduce a chosen sound, which makes it easier to explore similar sounds afterwards. This is similar to what presets offer, but much more flexible.

We implement a classification algorithm to recognize the audio effect used on a guitar sound and reach up to 95% accuracy on the test set. This classifier is also compiled and can be used as a standalone plugin to analyze a sound and automatically instantiate the correct effect. We also propose a pipeline to generate a synthetic dataset of guitar sounds processed with randomly configured effects, much faster than was previously possible. We use that dataset to train different neural networks to automatically retrieve the effects' parameters. We demonstrate that a feature-based approach with typical Music Information Retrieval (MIR) features competes with a larger Convolutional Neural Network (CNN) trained on audio spectrograms, while being faster to train and requiring far fewer parameters. Contrary to the existing literature, making the effects we use differentiable does not improve the performance of our networks, which already reproduce unseen audio effects fairly well when trained only in a supervised manner with a loss on the parameters. We complement our results with an online perceptual experiment, which shows that the proposed approach yields sound matches that are much better than those obtained with random parameters. This suggests that the technique is promising and that any audio effect could be reproduced by a correctly configured generic effect.

Keywords: audio effects, music information retrieval, differentiable signal processing, sound matching, computer music

Dataset

This work is based on the Audio Effects Dataset released by Fraunhofer IDMT in 2010. It is available here and was created for the classification of audio effects in polyphonic and monophonic bass and guitar recordings. See the published paper for more information:

Stein, Michael, et al. “Automatic detection of audio effects in guitar and bass recordings.” Audio Engineering Society Convention 128. Audio Engineering Society, 2010.

Example sounds from the dataset

| Clean | Feedback Delay |

| Slapback Delay | Reverb |

| Chorus | Flanger |

| Phaser | Tremolo |

| Vibrato | Overdrive |

| Distortion | |

Synthetic sounds

A synthetic dataset is obtained by processing clean audio from the IDMT Dataset with the pedalboard library.

- Modulation sounds are obtained with the pedalboard.Chorus plugin;
- Delay sounds with the pedalboard.Delay plugin;
- Distortion sounds with an effect chain of pedalboard.Distortion, pedalboard.LowShelfFilter and pedalboard.HighShelfFilter.
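The three effect families listed above could be configured at random along the following lines. This is only a minimal sketch: the parameter ranges in `PARAM_RANGES` and the helper names `sample_params` and `build_board` are hypothetical and chosen for illustration, since the ranges actually used to build the dataset are not given on this page. Only the pedalboard plugin classes themselves come from the description above.

```python
import random

# Hypothetical ranges, for illustration only: the ranges used for the
# actual synthetic dataset are not stated in this document.
PARAM_RANGES = {
    "modulation": {"rate_hz": (0.1, 10.0), "depth": (0.0, 1.0),
                   "feedback": (0.0, 0.9), "mix": (0.0, 1.0)},
    "delay": {"delay_seconds": (0.05, 1.0), "feedback": (0.0, 0.9),
              "mix": (0.0, 1.0)},
    "distortion": {"drive_db": (0.0, 60.0), "low_gain_db": (-12.0, 12.0),
                   "high_gain_db": (-12.0, 12.0)},
}


def sample_params(effect, rng):
    """Draw one uniformly random setting for every parameter of `effect`."""
    return {name: rng.uniform(low, high)
            for name, (low, high) in PARAM_RANGES[effect].items()}


def build_board(effect, params):
    """Build the pedalboard chain for `effect` from sampled parameters."""
    # Imported lazily so that the sampling code works without pedalboard.
    from pedalboard import (Pedalboard, Chorus, Delay, Distortion,
                            LowShelfFilter, HighShelfFilter)
    if effect == "modulation":
        return Pedalboard([Chorus(rate_hz=params["rate_hz"],
                                  depth=params["depth"],
                                  feedback=params["feedback"],
                                  mix=params["mix"])])
    if effect == "delay":
        return Pedalboard([Delay(delay_seconds=params["delay_seconds"],
                                 feedback=params["feedback"],
                                 mix=params["mix"])])
    if effect == "distortion":
        return Pedalboard([Distortion(drive_db=params["drive_db"]),
                           LowShelfFilter(gain_db=params["low_gain_db"]),
                           HighShelfFilter(gain_db=params["high_gain_db"])])
    raise ValueError(f"unknown effect: {effect}")


rng = random.Random(0)  # fixed seed so the sampled settings are reproducible
settings = {effect: sample_params(effect, rng) for effect in PARAM_RANGES}
```

Given a clean recording loaded as a float array `clean_audio` at `sample_rate`, a processed example would then be obtained with `build_board(effect, settings[effect])(clean_audio, sample_rate)`, and the sampled settings would serve as regression targets.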

Example sounds for each of those are available below:

| Modulation | Delay | Distortion |

AutoFx

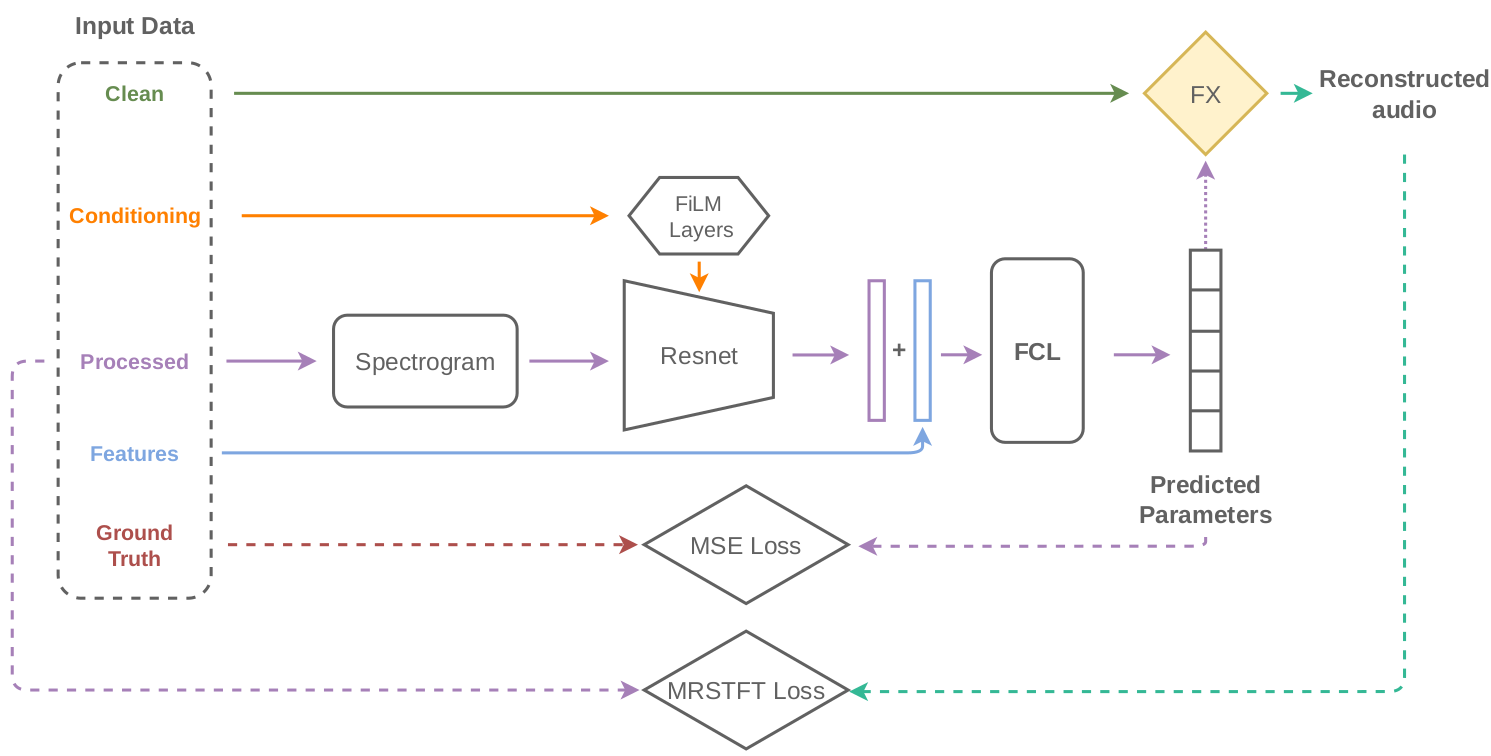

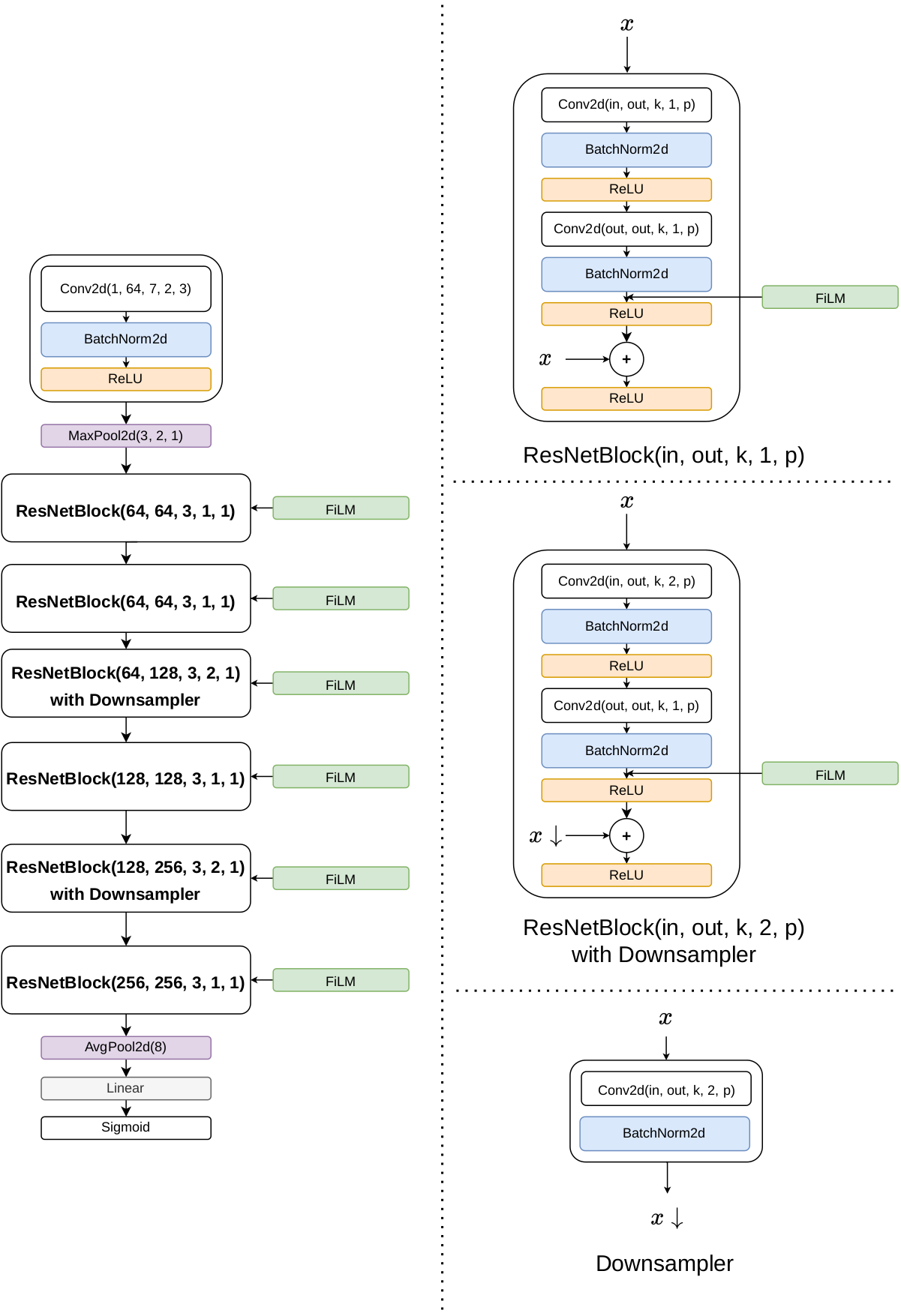

The architecture implemented for the regression of the effects' parameters is dubbed AutoFx and is trained according to the following procedure:

The network architecture is the following:

Reconstructions of synthetic sounds are given below:

| Original | Reconstruction |

| Original | Reconstruction |

| Original | Reconstruction |
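As stated in the abstract, the networks are trained in a supervised manner with a loss on the parameters. The exact form of that loss is not given on this page, so the sketch below assumes a mean squared error between predicted and target parameters, each rescaled to [0, 1]. The helper names `normalize` and `parameter_loss` and the example ranges are hypothetical.

```python
def normalize(params, ranges):
    """Rescale each parameter to [0, 1] using its (low, high) range."""
    return [(params[name] - low) / (high - low)
            for name, (low, high) in ranges.items()]


def parameter_loss(predicted, target):
    """Mean squared error between two lists of normalized parameters.

    Assumption: the actual AutoFx loss may differ from a plain MSE.
    """
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)


# Hypothetical ranges and settings for a two-parameter effect.
ranges = {"mix": (0.0, 1.0), "drive_db": (0.0, 60.0)}
target = normalize({"mix": 0.5, "drive_db": 30.0}, ranges)
loss = parameter_loss([0.5, 0.5], target)
```

Normalizing first keeps parameters with large numeric ranges, such as a drive in decibels, from dominating the loss over parameters that already live in [0, 1].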

Reconstruction of out-of-domain sounds

The following sounds are taken from the online perceptual experiment that was conducted.

| Reference | 163-NC | 163-C | 211-C | AutoFX | AutoFX-F |

| Reference | 163-NC | 163-C | 211-C | AutoFX | AutoFX-F |

| Reference | 163-NC | 163-C | 211-C | AutoFX | AutoFX-F |